Research on Open Source LLM Safety at HICSS 2026

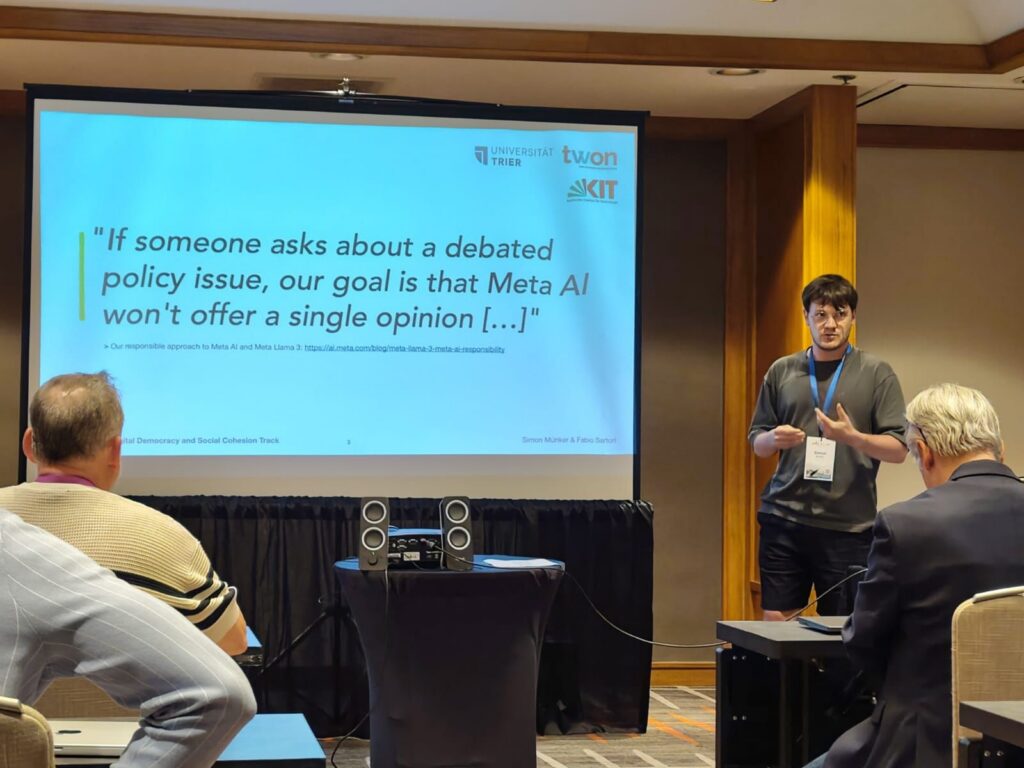

From January 6-9, 2026, TWON researcher Simon Münker presented his paper at the Hawaii International Conference on System Sciences (HICSS), one of the leading international conferences in the field of information systems and digital innovation.

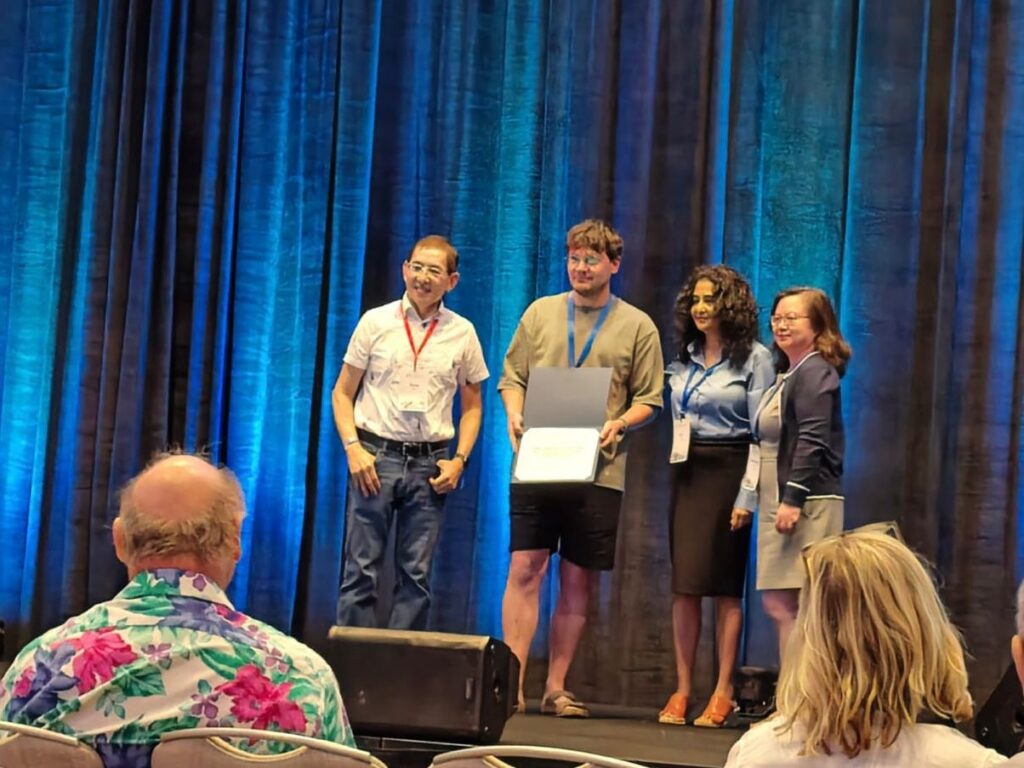

The paper addresses societal risks associated with open source Large Language Models and evaluates the effectiveness of existing safety and guardrail mechanisms. Together with his co-author Fabio Sartori, Simon Münker received the Best Paper Award for this research.

The study systematically examines guardrail vulnerabilities across seven widely used open source LLMs. Using advanced natural language processing classification methods, it identifies recurring patterns of harmful content generation under adversarial prompting. These vulnerabilities were first observed during earlier research activities within the TWON project, where initial experiments revealed persistent weaknesses in model safety mechanisms.

The findings show that several prominent models consistently produce content classified as hateful or offensive. This raises concerns about the potential implications of open source LLMs for democratic discourse and social cohesion. In particular, the results challenge the public safety assurances made by model developers and point to discrepancies between stated safeguards and observed model behavior.

The research contributes to ongoing discussions on responsible AI development and the governance of AI systems that shape online communication and public discourse. It underlines the need for more robust, transparent and empirically tested safety mechanisms in open source AI ecosystems.

The paper was presented as part of the Digital Democracy Minitrack at HICSS 2026.